The AI hype cycle and emergent software

I've been writing software long enough to recognize a hype cycle when I see one. I do think LLMs are cool; this kind of stuff was only theoretical back when I published my data science book. Being able to whip up a model directly on my computer vividly illustrates how far computing has come since my childhood days of navigating through Pathminder. But when you strip away the corporate branding and the competitive attention-seeking of every company that will stop at nothing to get "AI" emblazoned over every inch of its products, you see the same thing that comes with any other massively hyped computer-related development:

- an impressive `$new_technology`
- an army of companies wanting `$new_technology`
- another army of companies building interfaces and tools for `$new_technology`

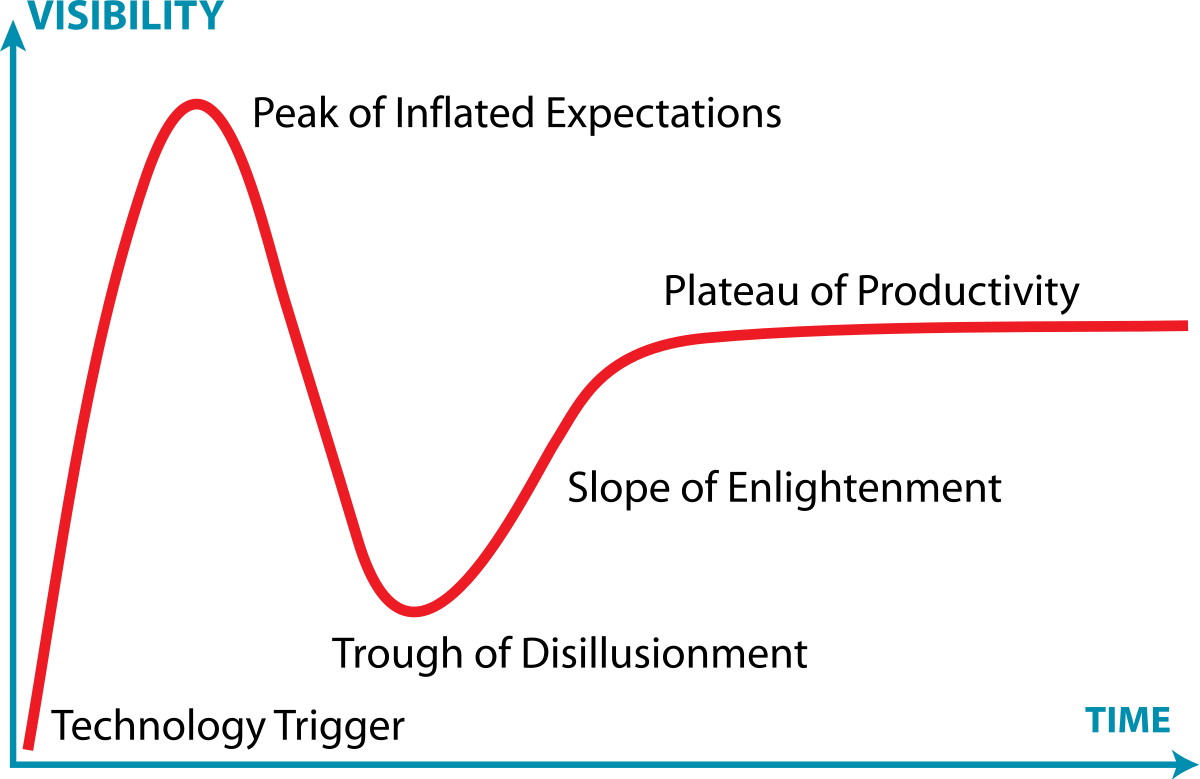

One famous conceptualization of this is the Gartner hype cycle:

To me, we're still on the left of the image. We have a long way to go.

The technology is impressive; there's no doubt about that, even if the underlying mechanism is essentially "generate contextually relevant words based on an enormous array of previous words." I've always enjoyed predictive models, especially implementing them in interesting ways, so seeing these massive prediction machines form the start of a whole new relationship between people and computers is pretty awe-inspiring. We are on the precipice of finally having something like LCARS in real life.
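To make "generate contextually relevant words based on previous words" concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a training text, then greedily picks the most frequent successor. This is an illustration under heavy simplifying assumptions, not how production LLMs work; those use neural networks over subword tokens with very long contexts, while this looks at exactly one previous word. The function names (`train_bigrams`, `predict_next`, `generate`) are made up for this example.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent successor of `word`, or None if it was never followed."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # most_common breaks ties by first occurrence in the training text
    return counts.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily extend `start` by up to `length` predicted words."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ate the fish")
    print(" ".join(generate(model, "the", 4)))  # the cat sat on the
```

Widening the context from one word to a fixed window turns this into an n-gram model; LLMs replace the count table entirely with a learned neural network.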

My favorite predictive model to this day is the ID3 algorithm developed by Ross Quinlan. I like it because it lets you see how often certain attributes in your data were used to derive each more accurate iteration of the model. That all seems like kid stuff today compared to what these next-word predictors can do, to the point that their capabilities encourage some folks to surrender reason for the promise of Cognition as a Service.
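For readers who haven't met ID3: it grows a decision tree by repeatedly splitting on whichever attribute yields the highest information gain, i.e. the drop in label entropy after the split. The sketch below is a minimal version that assumes categorical attributes stored in dicts; it also keeps a `usage` counter of how often each attribute is chosen as a split, which is one way to surface how much each attribute contributed to the model. The toy weather-style dataset and all names are hypothetical, and refinements from Quinlan's later C4.5 (continuous attributes, pruning) are omitted.

```python
from __future__ import annotations

import math
from collections import Counter

# Hypothetical toy dataset: each row maps attribute names to categorical values.
ROWS = [
    {"outlook": "sunny", "windy": "no", "play": "no"},
    {"outlook": "sunny", "windy": "yes", "play": "no"},
    {"outlook": "overcast", "windy": "no", "play": "yes"},
    {"outlook": "rain", "windy": "no", "play": "yes"},
    {"outlook": "rain", "windy": "yes", "play": "no"},
    {"outlook": "overcast", "windy": "yes", "play": "yes"},
]


def entropy(labels: list[str]) -> float:
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(rows: list[dict], attr: str, target: str) -> float:
    """Reduction in target entropy achieved by splitting `rows` on `attr`."""
    base = entropy([r[target] for r in rows])
    remainder = 0.0
    for value in {r[attr] for r in rows}:
        subset = [r[target] for r in rows if r[attr] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return base - remainder


def id3(rows: list[dict], attrs: list[str], target: str, usage: Counter):
    """Build a tree of nested dicts; `usage` records how often each attribute is split on."""
    labels = [r[target] for r in rows]
    if len(set(labels)) == 1:   # pure node: every row has the same label
        return labels[0]
    if not attrs:               # no attributes left: fall back to the majority label
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: information_gain(rows, a, target))
    usage[best] += 1
    remaining = [a for a in attrs if a != best]
    branches = {}
    for value in sorted({r[best] for r in rows}):
        subset = [r for r in rows if r[best] == value]
        branches[value] = id3(subset, remaining, target, usage)
    return {best: branches}


if __name__ == "__main__":
    usage = Counter()
    print(id3(ROWS, ["outlook", "windy"], "play", usage))
    print(usage)
```

On this data `outlook` wins the first split (gain of about 0.67 bits versus about 0.08 for `windy`), and `windy` is only consulted inside the `rain` branch.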

Speaking of kids, I do wonder what will happen when the tinker-to-delight arc gets flipped. The other day I was thinking about one of my sons, who likes to open the developer tools in the browser and play around with the HTML of web pages. It's bittersweet to see him exploring the same way I did, learning by poking around until something magic seems to happen. He's growing up in a world where he will be able to tell a computer to do anything and it will do it, and the spark of joy that comes from working in a primitive fashion (HTML and JavaScript) to orchestrate abstractions on the screen will be replaced by a natural language interface that does it for you. I want to believe the joy that comes from learning computer languages will still be there in the future, but it's hard not to recognize the changing nature of things around me. There will undoubtedly be people in the next decade who call me old and stale because I still like to design and implement programming languages.

I understand the momentum around AI—I really do—I just wish these things weren't always so commoditized. I'm sure there will be some interface in the future that automatically suggests sponsored information to me. Nevertheless, there's gold out west in the land of technology and everyone can see the dollar signs—even me.

That's where my other prediction comes in: not only do I think today's hot market for "AI" in everything is standard-issue corporate hype, I also think that this technology is going to fundamentally shift software development. Yes, a lot of people are going to make a lot of money with the current trends in human-computer interaction. But no, these are not going to make programmers obsolete, and no, integrating code generation into your stack as a means of aiding your senior engineers isn't going to make your business more competitive.

What is going to change the entire system of programming is emergent software.

Emergent software is software that self-corrects and adapts. It's software that can update its specifications in real time based on business operations and trends. It's software that knows how to write tests to validate business logic, and knows how to implement code that makes those tests pass in order to change its underlying structure. The software itself is not a model; rather, the ecosystem around the software will consist of highly autonomous generative models augmented with static analysis tools, profilers, and the other affordances a modern-day optimizer relies on at their keyboard.
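Since nothing like this exists as a product, the following is only a toy sketch of the write-tests-then-make-them-pass loop, under loud assumptions: the business rule (10% off orders of 100.00 or more) is hypothetical, it is encoded as input/expected-output test cases, and `propose_candidates` is a hard-coded stand-in for a generative model, not a call to any real model API. The loop simply accepts the first candidate implementation that passes every test.

```python
from __future__ import annotations

from typing import Callable, Iterable, Optional

Impl = Callable[[float], float]

# Hypothetical business rule, encoded as (order_total, expected_amount_due) test cases:
# orders of 100.00 or more get 10% off; smaller orders pay full price.
SPEC: list[tuple[float, float]] = [(50.0, 50.0), (100.0, 90.0), (250.0, 225.0)]


def passes(impl: Impl, spec: list[tuple[float, float]]) -> bool:
    """Run every test case; a wrong answer or an exception counts as failure."""
    try:
        return all(abs(impl(x) - expected) < 1e-9 for x, expected in spec)
    except Exception:
        return False


def propose_candidates() -> Iterable[Impl]:
    """Stand-in for a generative model proposing implementations (hard-coded here)."""
    yield lambda total: total * 0.9                              # discounts every order
    yield lambda total: total - 10 if total > 100 else total     # flat $10 off, wrong boundary
    yield lambda total: total * 0.9 if total >= 100 else total   # matches the spec


def evolve(spec: list[tuple[float, float]], candidates: Iterable[Impl]) -> Optional[Impl]:
    """Accept the first candidate that passes the whole spec, or None if none do."""
    for impl in candidates:
        if passes(impl, spec):
            return impl
    return None


if __name__ == "__main__":
    chosen = evolve(SPEC, propose_candidates())
    print(chosen(120.0) if chosen else "no candidate passed")
```

In the kind of system described here, the candidates would come from a model and the acceptance check would also draw on static analysis and profiling; the generate-and-test shape is the only part this sketch is meant to show.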

Nothing like this exists yet as far as I'm aware, but as someone with a deep fascination for programming languages and how they're implemented, I can't help but think of properly tuned models as a kind of abstraction layer on top of computer programming itself. This is more than just code generation: it's software development paradigms embedded into model interactions, providing the kind of rich development and architecture experience you might get from some futuristic, conversational version of the Rust compiler's feedback.

I'm both excited and hesitant about the way this hype cycle will eventually stall out. I don't think we'll ever quite get to an LLM that designs its own business automations, but I do think we will have LLMs rapidly leveraging dynamic templates of business automations. The world of corporate technology, in other words, is definitely changing. But as long as we don't lose our fascination with how these computers work and how, deep down, they're just little machines that do exactly what they're told, I think we humans will be alright in the end. We are, after all, the source of every innovation up to this point, and I'm reluctant to consider anything available today as something that could truly displace the electric meatballs behind our eyes.

Stay curious out there.